AI Assessment Tool

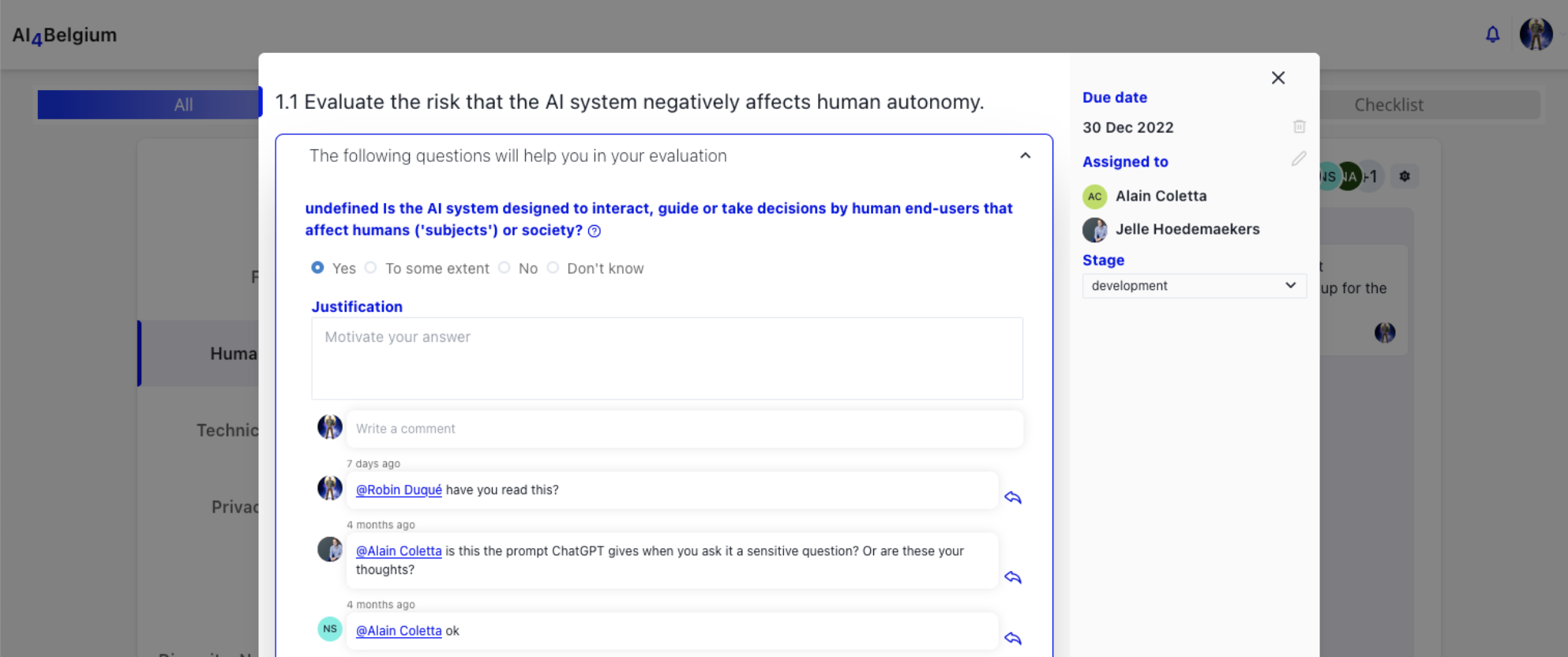

Welcome to our open-source, multidisciplinary and interactive online tool for assessing the trustworthiness of an organization's AI implementation. The tool is based on the ALTAI (Assessment List for Trustworthy Artificial Intelligence) recommendations published by the European Commission and is designed to help organizations ensure that their AI systems are transparent, robust and trustworthy.

Highlight Areas of Risk

In today's fast-paced world, organizations are increasingly adopting AI to streamline operations and improve decision-making. However, AI systems must be developed and implemented with caution to ensure that they do not compromise fundamental human rights or perpetuate bias and discrimination. Our tool provides a comprehensive assessment of your organization's AI implementation, highlighting both areas of strength and areas for improvement.

Recommendations Report

You will also receive detailed suggestions and guidance for improving the trustworthiness of your AI system. This helps you build and maintain trust with your customers, employees and other stakeholders, and mitigate the risks associated with AI implementation.

You are in control

One of the key benefits of our open-source tool is that it can be hosted and fully controlled by your organization, so you keep complete ownership and control over your data and assessments. By hosting the tool on your own servers, you can also ensure that it meets your organization's specific security and privacy requirements. Because the tool is open source, you can modify and adapt it to fit your organization's unique needs. This flexibility and control make the tool well suited to organizations that want to assess the trustworthiness of their AI systems while keeping full control over their data and assessments.

Try the demo instance

The demo instance is a publicly available instance for trying out the AI Ethics Assessment Tool.
Meet the AI4Belgium Ethics & Law advisory board

Nathalie Smuha is a legal scholar and philosopher at the KU Leuven Faculty of Law, where she examines legal, ethical and philosophical questions around Artificial Intelligence (AI) and other digital technologies.

Nele is a legal advisor at Unia, an equality body and human rights institution. She specializes in technology and human rights, especially non-discrimination. She is also active at the European level as chair of the working group on AI of the European Network of Human Rights Institutions.

Jelle is an expert in AI regulation. He works as an ICT normalisation expert at Agoria, where he focuses on the standardisation and regulation of new technologies such as Artificial Intelligence. Within Agoria, he also works on policies surrounding new technologies. Jelle also co-leads the AI4Belgium working group on Ethics and Law, which examines the ethical and legal implications of AI for the Belgian ecosystem.

I co-direct FARI - AI for the Common Good Institute, a joint initiative of the Université Libre de Bruxelles (ULB) and the Vrije Universiteit Brussel (VUB). I am also an associate researcher at Algora Lab (UdeM, Mila, Canada) and an adjunct professor (UQAM, Canada). I have developed and published an AI Ethics Tool, and I work on the responsible use of technologies in healthcare.

The more digitalised our lives become, the more decisions we receive are personalised based on our information. My goal is to uncover how these processes work and help people understand what happens with their data. I find it curious that so little is known about data in the age of big data. My method consists of uncovering the hidden life of data by mapping these processes in easy-to-digest texts, scenarios and visuals. We then use co-creation sessions to map current practices against the expectations of end users, regulators or innovators.

Yves Poullet was rector of the University of Namur (2010-2017). He is a founder and former director of CRIDS (1979-2009). He was also a member of the Privacy Protection Commission for 12 years.

Nathanaël Ackerman is the managing director of the AI4Belgium coalition and the Digital Mind for Belgium, appointed by the Secretary of State for Digitalization. He is also head of the “AI – Blockchain & Digital Minds” team at the Belgian Federal Public Service Strategy and Support (BoSa).

Description

This tool was designed to enable team members with diverse expertise to collaborate and hold conversations about key topics related to the trustworthiness of their AI implementation.

Topics assessed

With the support of cabinet Michel and cabinet De Sutter.